From coding agents to system operators

Enterprise needs more than coding agents

AI is changing software engineering fast. Tools like Copilot, Cursor, Windsurf, and Claude Code can write, refactor, debug, and explain code with impressive speed. But there’s a basic mismatch. Most of these tools are built for a single developer seat : install an extension, connect an API key, and start prompting.

That's fine for an individual. An enterprise is different: many teams, many repos, strict compliance, real production risk, and a roadmap tied to customer commitments.

Teams are the unit, not individuals

In enterprises, the real unit is the team. Platform, backend, frontend, data, infra, QA, and security teams all operate with different conventions and different risk tolerance.

A serious enterprise agent must be team-aware. It should know who the developer is, which repos and paths they can access, which actions are safe to do automatically, and which ones must go through approvals with an audit trail. Without this, what we see today is shadow AI adoption: developers bring their own keys and workflows, leadership loses visibility, security gets nervous, and finance can't forecast spend.

Tokenomics

Cost governance becomes unavoidable at scale. Leadership doesn’t only ask “does it work?” they ask “how much are we spending, and are we getting value?” Token spend has to be managed like any other shared resource, with budgets and showback at team level.

But spend alone is not the point. Enterprises need to measure effectiveness: are teams actually using the output, how many iterations are needed to get something usable, does review time go down or up, and does AI reduce rework or increase it. If a developer needs eight rounds of prompting for a simple change, the solution is not more tokens it’s better guardrails, better context, and better operating practices.

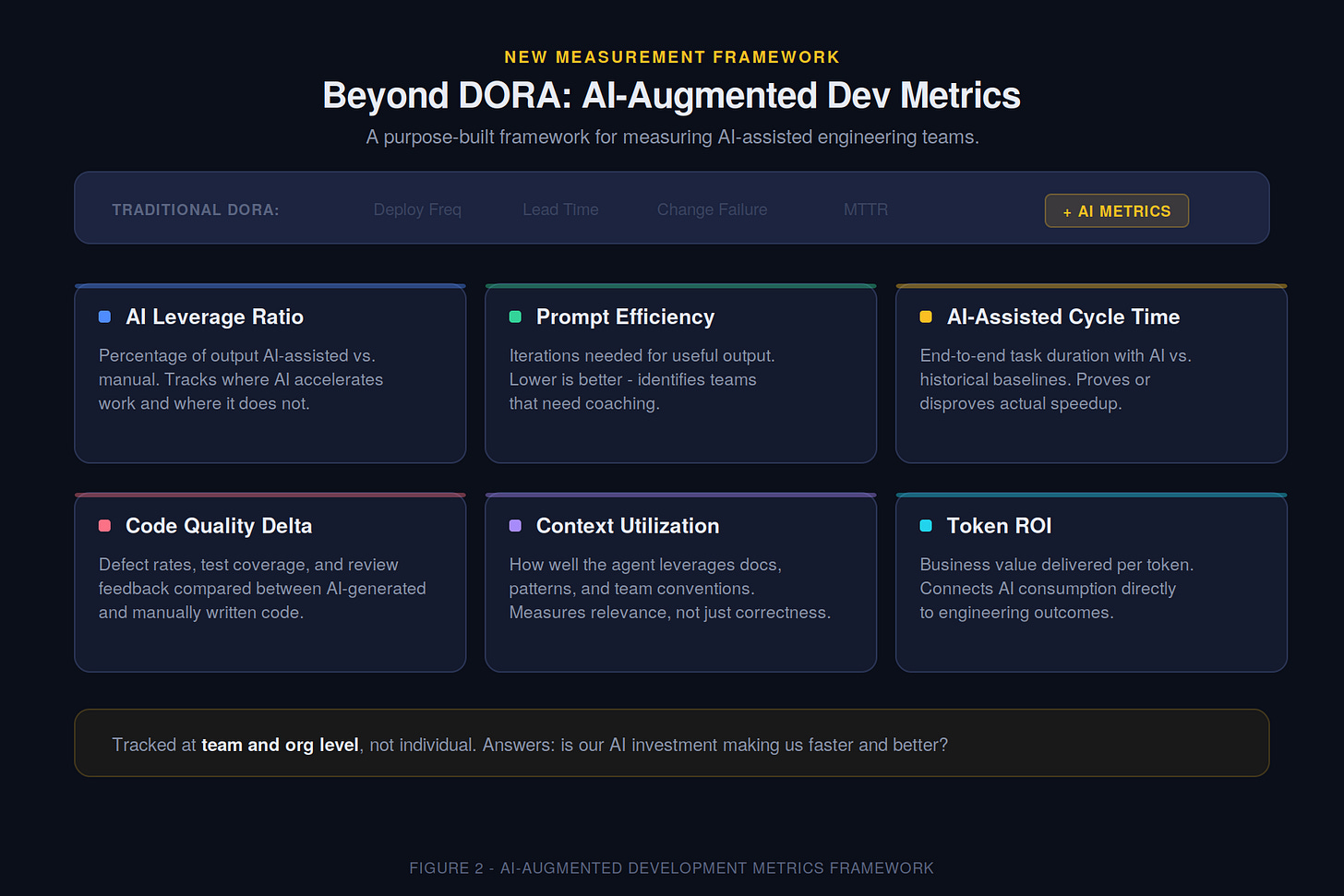

Beyond DORA metrics

DORA metrics are still useful, but AI changes the shape of work. Enterprises care about outcomes: does AI reduce end-to-end cycle time, does it reduce review latency, does it improve or hurt defect rates, and does it generate code that matches the team’s patterns.

If the agent produces “correct” code that doesn’t fit your conventions, it creates more work even if it compiles. Similarly, if AI cuts coding time by 40% but review time doubles because reviewers don’t trust the output, the net cycle time gain is zero or negative.

We think of these as a new set of enterprise AI metrics.

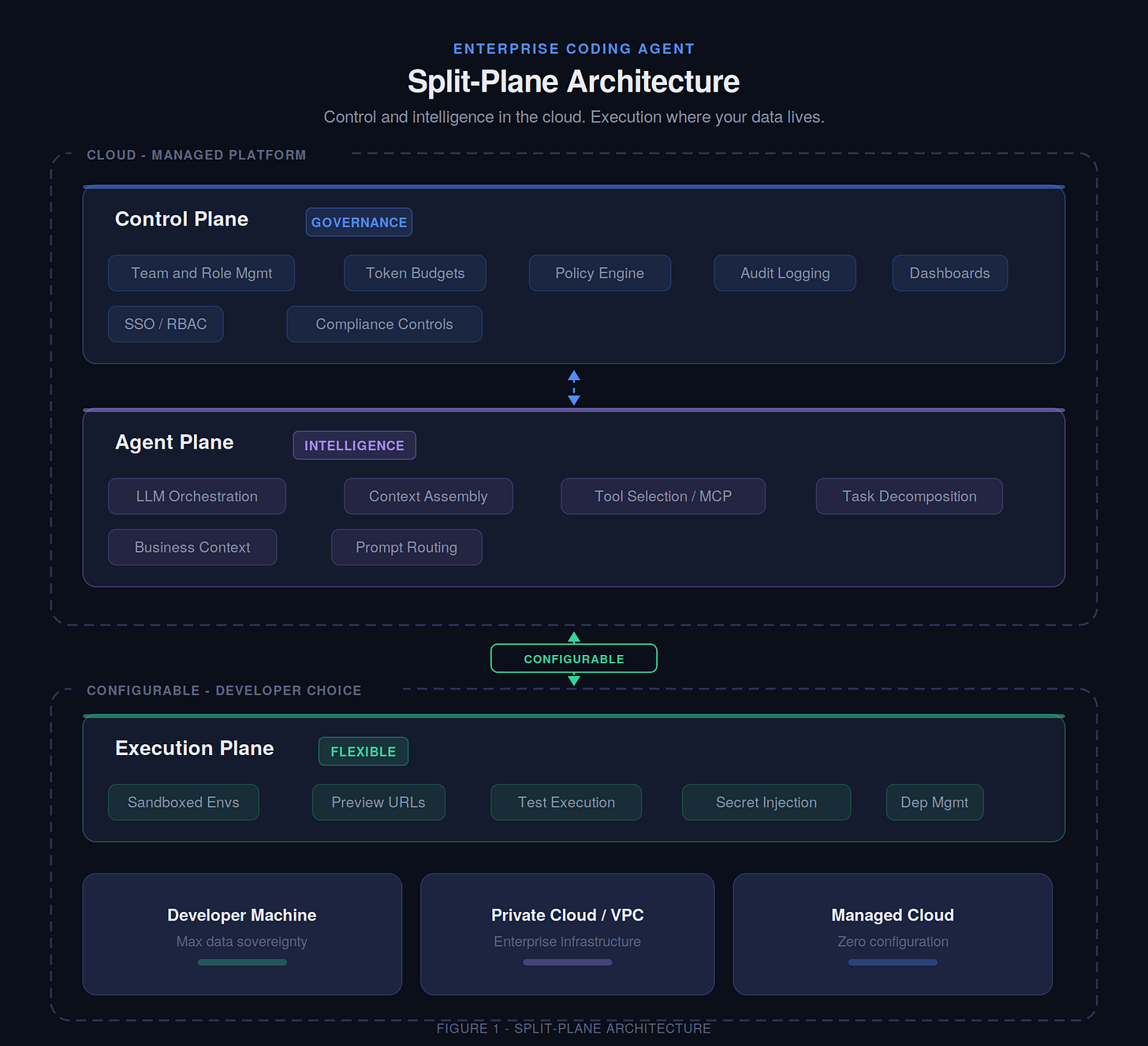

Split-plane architecture

Enterprises need a split-plane design.

The control plane is the organisational brain: identity, policies, approvals, budgets, audit logs, and metrics.

The agent plane does planning, context assembly, and tool orchestration, but it must be deployable depending on sensitivity SaaS for low-risk use, customer VPC for many enterprises, and on-prem/air-gapped for regulated environments.

The execution plane is where builds, tests, migrations, and previews actually run. This must be flexible: local or customer-managed for sensitive code, cloud sandboxes for scale, and hybrid in many real organisations. Also, "code never leaves" is only true if prompts, retrieval, logs, embeddings, and telemetry are kept inside the same boundary otherwise leakage still happens through the side doors.

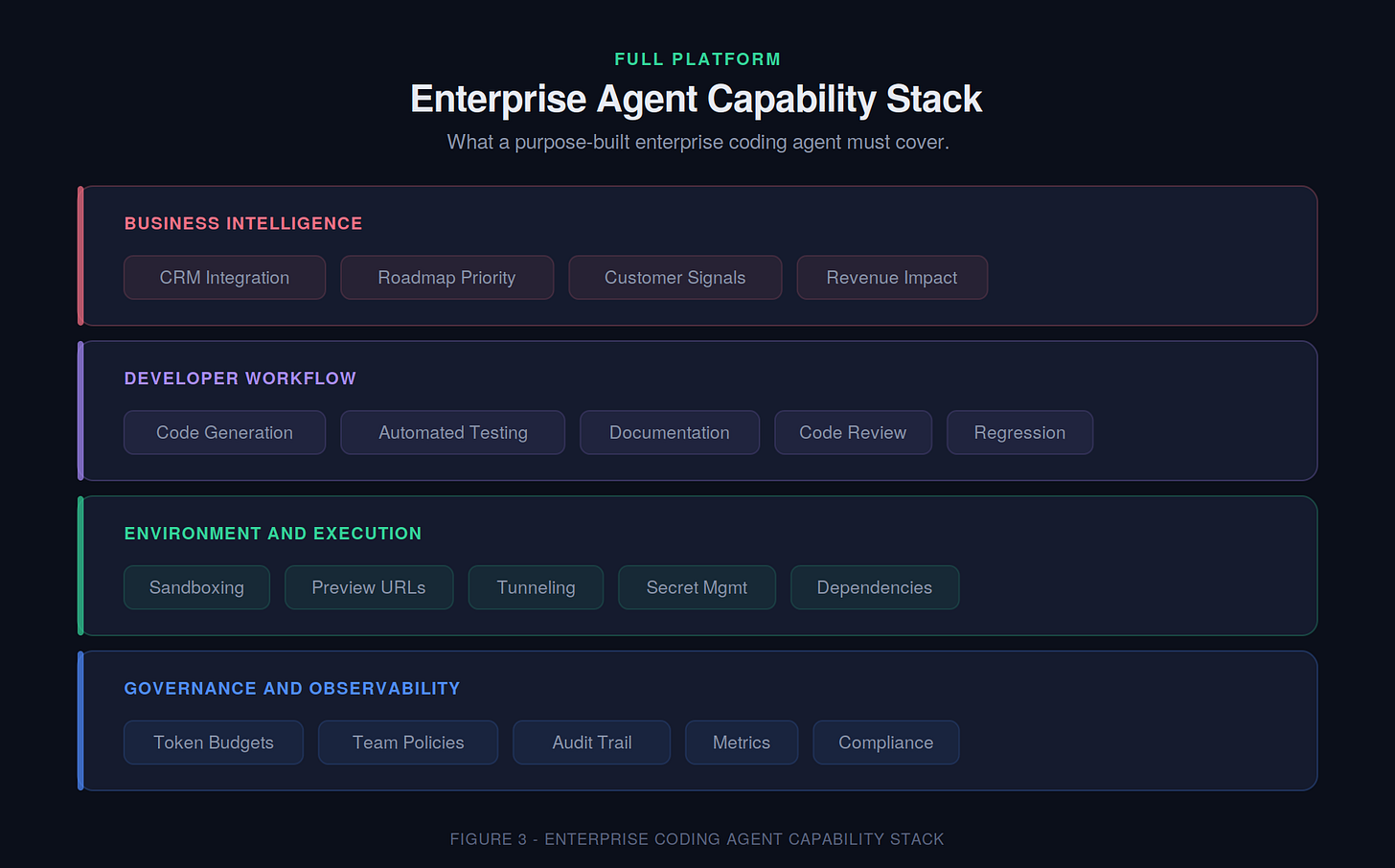

Where today's coding agents still fall short

If you look at today’s tools through an enterprise lens, the gaps are consistent. They treat sessions as individual interactions, not organisational workflows. They treat context as a flat blob instead of layered standards + team conventions + repo patterns, and they don’t clearly separate trusted from untrusted context which is a real prompt injection surface in agentic workflows where the agent pulls in code, docs, and issues from multiple sources.

They also lack strong governance primitives: what the agent is allowed to do, what needs approval, and how every action is captured as evidence. And most tools don’t have serious eval and regression harnesses without that, you can’t safely improve quality over time.

Business context is the final missing layer

The biggest missed opportunity is business context. Enterprises don't want an agent that only writes code faster. They want an agent that helps ship the right things: bugs creating support load, features blocking deals, recurring incidents, and technical debt that is strategically painful.

When the agent is connected to product systems, support tickets, incident tools, and delivery tracking, it can help prioritise work using real business signals not just technical guesswork. This doesn't replace human judgement, but it gives leadership and teams much better leverage.

The building blocks already exist: strong coding models, secure sandboxing, and emerging tool protocols like MCP. What’s missing is the enterprise layer governance, organisational awareness, deployable architecture, measurable outcomes, and business integration.

The next phase of agentic coding is not about writing more code, but about operating systems. A system operator is an enterprise-aware agent that understands teams, policies, environments, risk, cost, and business priorities and can safely coordinate work across them. As code generation becomes commoditized, durable value moves up the stack to orchestration, governance, and outcomes. Enterprises won’t win with faster prompts, but with agents that help them ship the right software, predictably and with accountability.